It haunted me. Like a weird posture peddler, the ad followed me everywhere I went online. Apparently one visit to Lumo’s site a few months ago was enough for Google’s ad network to put a Lumo ad in front of me on what felt like every site I visited.

I probably wouldn’t even have noticed if I weren’t already intrigued. I have wanted to fix my posture for years. When I see someone about to take a photo of me, I make a conscious effort to stand straighter. But when I see the resulting photos, I still often feel like I’m not standing as tall as I would like.

And yet I hesitated. Realtime feedback aside, I like to know that I can analyze my data later and compare it with data from other sources in order to get a fuller picture and run experiments. I had read that the LumoBack API wasn’t ready, so despite promises, I was concerned that the data would be stuck in the device, not readily available for external analysis. Every now and then I would do a search for LumoBack API, and finally I found the droids I was looking for. I couldn’t get access to the API or its documentation without an account, but it was enough to make me take the plunge.

Initial Experience

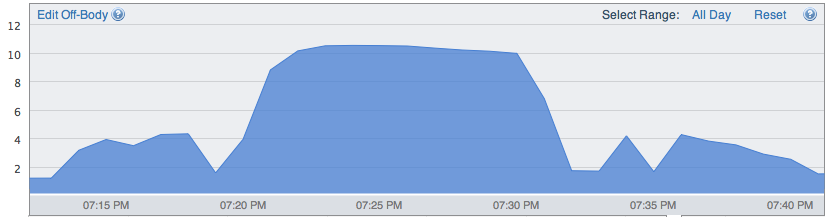

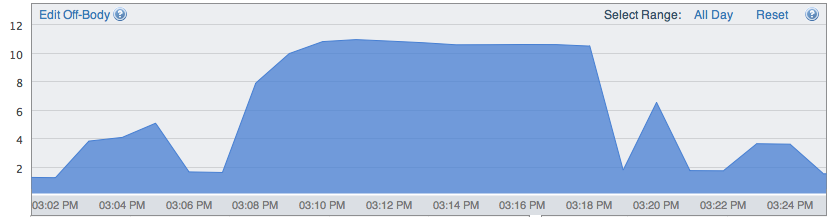

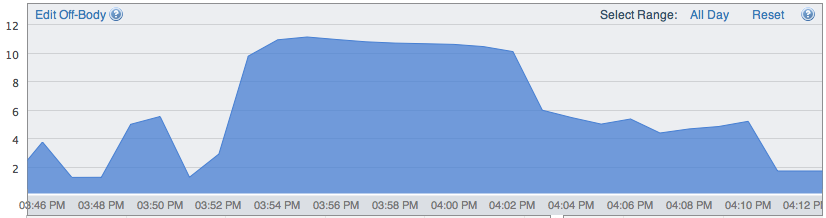

Lumo is pretty good at detecting whether I’m sitting, standing, or walking, although it doesn’t always pick up on the transitions between them very quickly. Occasionally it would take up to 30 seconds to realize I had stood up from a sitting position. You can tell it that you’re sitting or standing with a simple swipe, which hopefully teaches it to be quicker in the future.

There are several levels of sensitivity, depending on how much you want to be able to slouch before Lumo corrects you. I went immediately to the most sensitive setting, which turns Lumo into an all-out posture Nazi. While you’re sitting, that is.

Lumo is much stricter when you are sitting than when you are standing or walking, even on the most sensitive setting. I have to lean quite a lot while I’m standing before Lumo reacts. There is likely a good reason for this; as I move through my daily life, there are times when it is certainly okay to be a little out of position. That said, it does give the impression that the realtime feedback is less useful for standing and walking posture than it is for sitting posture.

Further tests may help determine whether this impression is warranted.

Update: after another day with Lumo and some additional introspection, I’m finding that my posture concerns when sitting are primarily with my lower back, which is where the LumoBack excels. When standing, my problem is more often with the upper back/shoulders, which is outside of what Lumo primarily tracks. When walking or driving, Lumo seems to ignore posture.

Presentation

Out of the box, the presentation is nicely done. The setup instructions are simple and straightforward. The calibration is easy. No problems connecting Bluetooth to my iPhone 5 running iOS 7.0. My LumoBack model is version 3.0.5.

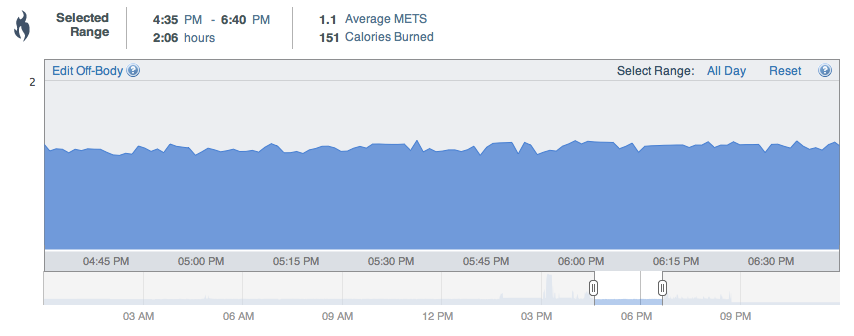

Car Trouble

Wow. Apparently car seats are terrible for good posture. Maybe it’s just my car seat, although I did also have similar trouble in a rental car recently. Previously I thought I had my car seat set in a position that would help me to sit up better, but apparently I couldn’t have been more wrong. While I can very quickly find my good posture in a normal chair, in the car it was nearly impossible. I spent a good 5-10 minutes adjusting my car seat and my posture to get Lumo to stop burning a hole in my lower back and still couldn’t maintain a good position for more than about 30 seconds at a time.

I would have to lean forward against the seat belt and away from the back of the seat, which is curved in a way that doesn’t allow for a straight back. The whole experience was incredibly awkward and frustrating. I tried to maintain posture as best I could and adjust the seat to try to support it.

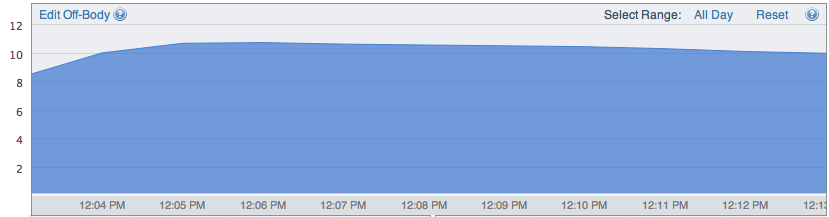

One of the more interesting and unique metrics the LumoBack has to offer is that it can automatically detect the amount of time you spend in the car. Presumably it determines this purely through accelerometer data. So before the car actually starts moving, Lumo doesn’t know you’re in a car and just assumes you’re sitting in an ordinary chair.
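
To make that guess concrete, here is a minimal sketch of how a device might tell "sitting in a parked car" from "sitting in a moving car" using nothing but accelerometer readings. This is purely my own illustration, not how Lumo actually works: the function name `label_windows`, the window size, the threshold, and the sample numbers are all assumptions I made up for the example.

```python
from statistics import pstdev

def label_windows(magnitudes, window=5, threshold=0.05):
    """Label each full window of acceleration magnitudes (in g).

    Hypothetical rule: a window whose readings vary by more than
    `threshold` (population standard deviation) is called "driving";
    anything calmer is called "sitting". Both values are assumptions.
    """
    labels = []
    for start in range(0, len(magnitudes) - window + 1, window):
        chunk = magnitudes[start:start + window]
        labels.append("driving" if pstdev(chunk) > threshold else "sitting")
    return labels

# Made-up readings: a parked car looks just like a chair (steady ~1 g),
# while a moving car adds bumps and vibration on top of gravity.
parked = [1.00, 1.01, 1.00, 0.99, 1.00]
moving = [1.00, 1.20, 0.85, 1.15, 0.90]

print(label_windows(parked + moving))
```

With a rule like this, the parked window is indistinguishable from sitting in any other chair, which would match what I saw before the car started moving.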